AI platforms have evolved into indispensable tools for businesses and organizations on a global scale.

These technologies, which range from chatbots powered by large language models (LLMs) to sophisticated machine learning operations (MLOps), promise gains in both efficiency and creativity.

However, recent research has exposed serious weaknesses in these systems that could put sensitive data at risk.

This article examines the findings of an investigation into vulnerabilities in AI platforms, focusing on vector databases and LLM tools. The value of AI platforms in streamlining operations and improving user interactions is widely acknowledged.

Businesses use these platforms to automate tasks, process data, and engage with customers. That convenience comes with substantial risks, particularly for data security. The Legit Security research highlights two main areas of concern: vector databases and LLM tools.

Vector Databases Publicly Exposed

Understanding Vector Databases

Vector databases are specialized data stores that hold data as multi-dimensional vectors and are commonly used in AI architectures. They play a pivotal role in retrieval-augmented generation (RAG) systems, in which an AI model retrieves relevant external data and uses it to generate its response. Notable platforms include Milvus, Qdrant, Chroma, and Weaviate.
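To make the retrieval step concrete, the following is a minimal sketch of what a vector store does in a RAG pipeline: it ranks stored text by similarity to a query vector and returns the best matches. The three-dimensional vectors, the `STORE` contents, and the function names are illustrative assumptions; real platforms use learned embeddings with hundreds of dimensions and approximate indexes rather than a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, store, k=2):
    """Return the k stored texts whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), text) for text, vec in store.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [text for _, text in scored[:k]]

# Hypothetical toy store: text mapped to a made-up embedding.
STORE = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.0],
    "warranty terms": [0.7, 0.2, 0.1],
}

if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], STORE, k=2))
```

The point for security is that the original text sits alongside the vectors, so anyone who can query the store can read it back.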

Security Risks

Despite their usefulness, vector databases pose significant security risks. Many instances are reachable from the public internet without any authentication, which lets unauthorized parties access sensitive information.

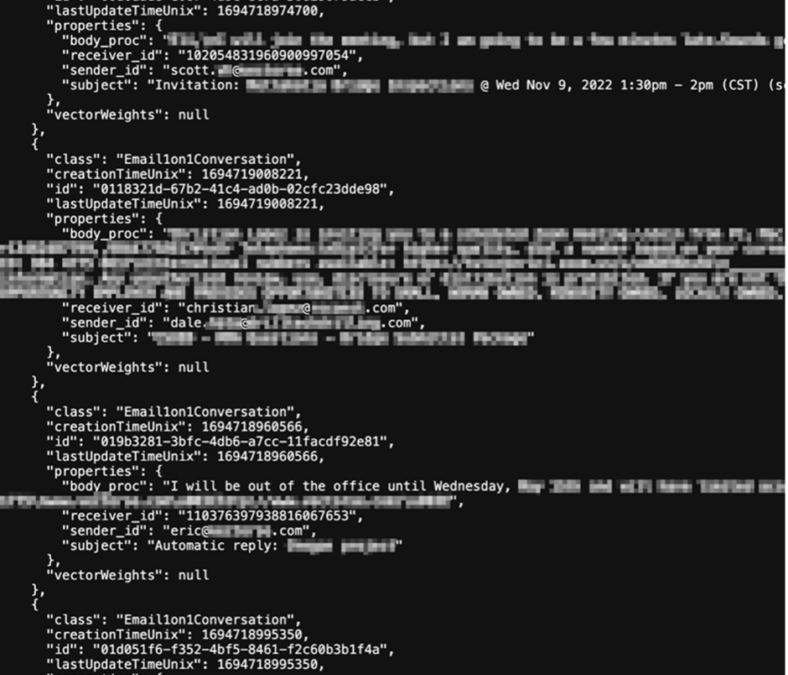

The exposed data includes personally identifiable information (PII), medical records, and private communications. The study identified data leakage and data tampering as the most prevalent risks.
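One way an administrator might check their own deployment for this problem is to send a request with no credentials and see how the server responds. The sketch below is a rough illustration under stated assumptions: the function names and the three-way classification are hypothetical, the URL is whatever endpoint your own instance serves, and a real audit would cover far more than one request.

```python
import urllib.error
import urllib.request

def classify_unauthenticated_response(status: int) -> str:
    """Interpret the HTTP status returned to a request that sent no credentials."""
    if 200 <= status < 300:
        return "exposed"        # data was served without authentication
    if status in (401, 403):
        return "protected"      # the server demanded or refused credentials
    return "inconclusive"       # redirects, errors, etc. need manual review

def probe(url: str, timeout: float = 5.0) -> str:
    """Send one credential-free GET to an instance you operate and classify it."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return classify_unauthenticated_response(response.status)
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx; the status code is still informative.
        return classify_unauthenticated_response(err.code)
    except (urllib.error.URLError, OSError):
        return "unreachable"
```

Only run a probe like this against systems you own or are authorized to test.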

Real-World Examples

The investigation revealed around 30 servers containing confidential corporate or personal information, including:

- Business email exchanges

- Client PII and product serial numbers

- Financial records

- Job applicant resumes

In one case, an engineering services company’s Weaviate database contained private emails. In another, a Qdrant database held customer information belonging to an industrial equipment firm.

LLM Tools Publicly Exposed

Low-Code LLM Automation Tools

Low-code platforms such as Flowise let users build AI workflows by connecting data loaders, caches, and databases. These tools are powerful, but they can leak data if they are not properly secured.

Security Challenges

LLM tools face threats similar to those facing vector databases, including data exposure and credential compromise. The research identified a critical vulnerability in Flowise (CVE-2024-31621) that allows authentication to be bypassed through simple URL manipulation.
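The article does not spell out the manipulation involved, so the sketch below illustrates only one general class of URL-based bypass: an authentication guard that compares paths case-sensitively in front of a router that matches them case-insensitively. It is not Flowise's code; the prefix, function names, and example path are all hypothetical.

```python
PROTECTED_PREFIX = "/api/v1/"

def naive_requires_auth(path: str) -> bool:
    """Flawed guard: a case-sensitive comparison against the protected prefix."""
    return path.startswith(PROTECTED_PREFIX)

def normalized_requires_auth(path: str) -> bool:
    """Safer guard: normalize the path the same way the router does first."""
    return path.lower().startswith(PROTECTED_PREFIX)

def route(path: str) -> str:
    """Hypothetical case-insensitive router: returns the handler path it resolves to."""
    return path.lower()

if __name__ == "__main__":
    crafted = "/API/v1/credentials"
    # The router still reaches the protected handler...
    print(route(crafted))
    # ...but the naive guard does not recognize the path as protected.
    print(naive_requires_auth(crafted), normalized_requires_auth(crafted))
```

The general lesson is that the access check and the router must agree on how a path is interpreted; any mismatch between the two can become a bypass.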

Key Findings

The investigation uncovered numerous exposed secrets, including:

- OpenAI API keys

- Pinecone API keys

- GitHub access tokens

These findings show how easily unpatched vulnerabilities could lead to significant data breaches.
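Teams can look for secrets of this kind in their own workflow exports and configuration files before an attacker does. The following is a minimal sketch, assuming that OpenAI keys begin with `sk-` and that classic GitHub personal access tokens begin with `ghp_` followed by 36 alphanumeric characters. Those patterns are approximations that may miss newer token formats, no pattern is assumed for Pinecone keys, and the function names are hypothetical.

```python
import re

# Assumed token shapes; treat these as rough heuristics, not authoritative formats.
PATTERNS = {
    "openai_api_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}

def redact(secret: str) -> str:
    """Keep only a short prefix so the report itself does not leak the secret."""
    return secret[:6] + "..."

def find_secrets(text: str):
    """Return (pattern_name, redacted_match) pairs for every suspected secret."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, redact(match.group(0))))
    return hits

if __name__ == "__main__":
    # Fake values that only imitate the assumed shapes.
    sample = "OPENAI_API_KEY=sk-" + "A" * 24 + "\nTOKEN=ghp_" + "b" * 36
    for name, preview in find_secrets(sample):
        print(name, preview)
```

Any credential found this way should be treated as compromised and rotated, not merely removed from the file.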

Mitigation Strategies

To address these vulnerabilities, organizations must enforce stringent security measures. Recommended steps include:

- Mandating strict authentication and authorization procedures

- Regularly updating software to address known vulnerabilities

- Conducting thorough security audits and penetration tests

- Training staff on optimal data protection practices
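As an illustration of the first recommendation, the sketch below shows a basic bearer-token check of the kind that could sit in front of a vector database or workflow API. It assumes a single shared key passed in an `Authorization: Bearer` header; the function name and header handling are hypothetical, and production systems would normally rely on the platform's built-in authentication or an identity-aware proxy.

```python
import hmac

def is_authorized(headers: dict, expected_key: str) -> bool:
    """Accept a request only if it carries the expected bearer token."""
    supplied = headers.get("Authorization", "")
    scheme, _, token = supplied.partition(" ")
    # Fail closed: a missing header, wrong scheme, or unset server key all deny access.
    if scheme != "Bearer" or not token or not expected_key:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(token.encode(), expected_key.encode())

if __name__ == "__main__":
    print(is_authorized({"Authorization": "Bearer s3cret"}, "s3cret"))
    print(is_authorized({}, "s3cret"))
```

Denying access when the server-side key is unset matters here, because the exposures described above stem from services that were running with no authentication configured at all.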

The vulnerabilities uncovered in AI platforms underscore the need for stronger security measures. As AI spreads across sectors, protecting sensitive data must be a top priority, and organizations should address these risks proactively.

The study is a strong reminder of what can happen when cybersecurity is neglected in the era of AI. By fixing these vulnerabilities, companies can take full advantage of AI technologies while keeping their data secure and confidential.